Evaluation & analyses details

8. Top 10 directories

9. Self defined groups

10. Top 10 products

11. Crawled parameters

12. HTTP vs. HTTPS crawls

13. Googlebot types

14. Content types

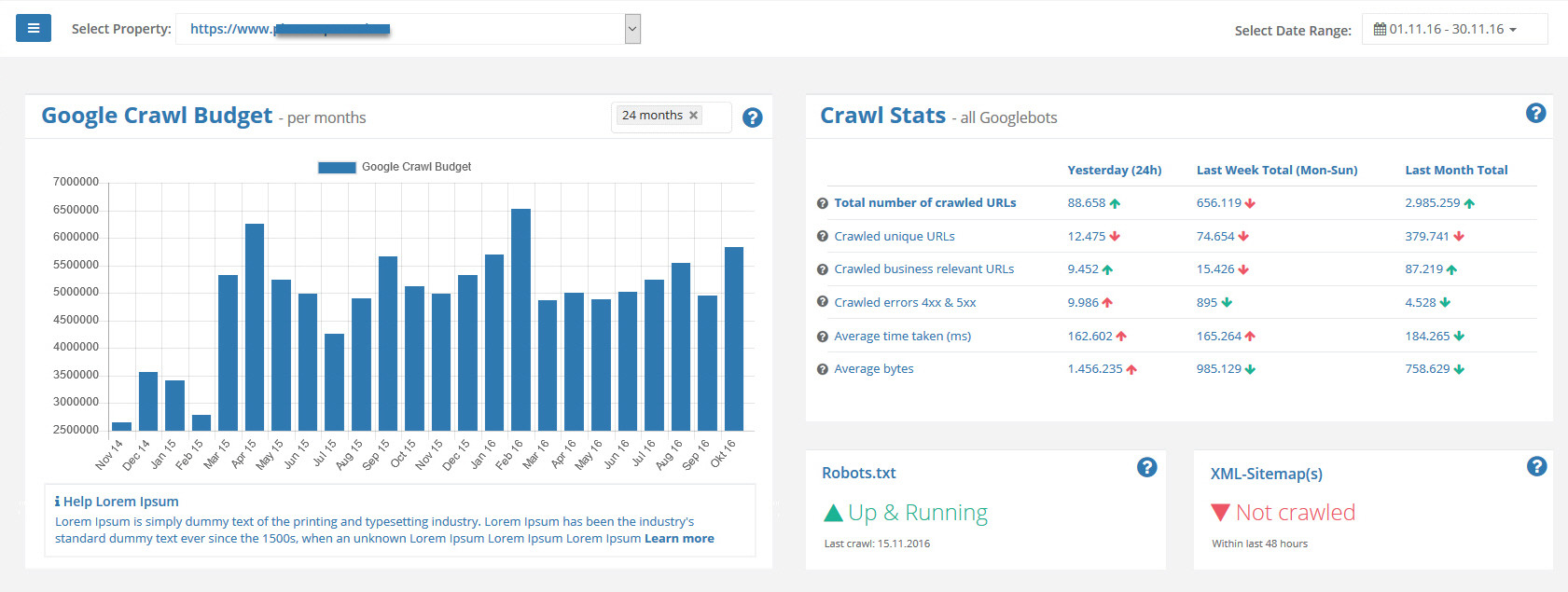

This overview shows how your crawl budget (also called crawl volume) has changed on a monthly basis. We deliberately chose a period of one month to make the values easier to compare and to let you identify trends at a glance. Extreme increases or decreases indicate crawling problems or Google updates. Because log files are stored for up to 5 years, you can identify trends, long-term developments and optimization successes.

We purposely define crawl budget differently from Google: for us, the term crawl budget stands for the total number of Googlebot requests during one month.
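As a rough illustration of this definition, the monthly crawl budget can be derived from parsed log entries by counting Googlebot requests per calendar month. This is a minimal sketch, not crawlOPTIMIZER's implementation; the `(timestamp, user_agent)` record shape and the simple substring match are assumptions made for the example.

```python
from collections import Counter
from datetime import datetime

GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"

def monthly_crawl_budget(requests):
    """Count Googlebot requests per calendar month.

    `requests` is an iterable of (timestamp, user_agent) tuples; this record
    shape is an assumption made for the example.
    """
    budget = Counter()
    for timestamp, user_agent in requests:
        if "Googlebot" in user_agent:
            budget[timestamp.strftime("%Y-%m")] += 1
    return dict(budget)

sample = [
    (datetime(2018, 5, 25, 8, 0), GOOGLEBOT_UA),
    (datetime(2018, 5, 26, 9, 30), GOOGLEBOT_UA),
    (datetime(2018, 6, 1, 7, 15), GOOGLEBOT_UA),
    (datetime(2018, 6, 2, 7, 15), "Mozilla/5.0 (Windows NT 10.0) Chrome/66.0"),
]
print(monthly_crawl_budget(sample))
```

The last sample entry is a regular browser request and is therefore not counted.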

Statistics also shows you current crawl trends compared to the previous day, week and month. This is where all the hard facts are collected and compared:

- the total number of Googlebot requests

- the number of crawled unique URLs

- the number of crawled “Business Relevant URLs”

- the number of crawled errors (status codes 4xx and 5xx)

- the average download speed of a URL

- the average download size of a URL in kilobytes

Finally, you can check whether your robots.txt and XML sitemap(s) have been crawled.
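Most of these figures can be computed directly from parsed log entries. The sketch below assumes each request is a dict with the hypothetical keys `url`, `status` and `bytes`; download speed is left out because it depends on a timing field that not every log format contains.

```python
def crawl_statistics(requests, business_relevant_urls):
    """Summarise parsed Googlebot requests.

    Each request is a dict with the hypothetical keys "url", "status"
    and "bytes"; adapt the names to your own log parser.
    """
    requests = list(requests)
    unique_urls = {r["url"] for r in requests}
    errors = [r for r in requests if 400 <= r["status"] < 600]
    total_kb = sum(r["bytes"] for r in requests) / 1024
    return {
        "total_requests": len(requests),
        "unique_urls": len(unique_urls),
        "business_relevant_urls": len(unique_urls & set(business_relevant_urls)),
        "errors": len(errors),
        "avg_size_kb": total_kb / len(requests) if requests else 0.0,
        "robots_txt_crawled": "/robots.txt" in unique_urls,
    }

sample_requests = [
    {"url": "/", "status": 200, "bytes": 2048},
    {"url": "/", "status": 200, "bytes": 2048},
    {"url": "/old", "status": 404, "bytes": 1024},
    {"url": "/robots.txt", "status": 200, "bytes": 1024},
]
stats = crawl_statistics(sample_requests, {"/"})
print(stats)
```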

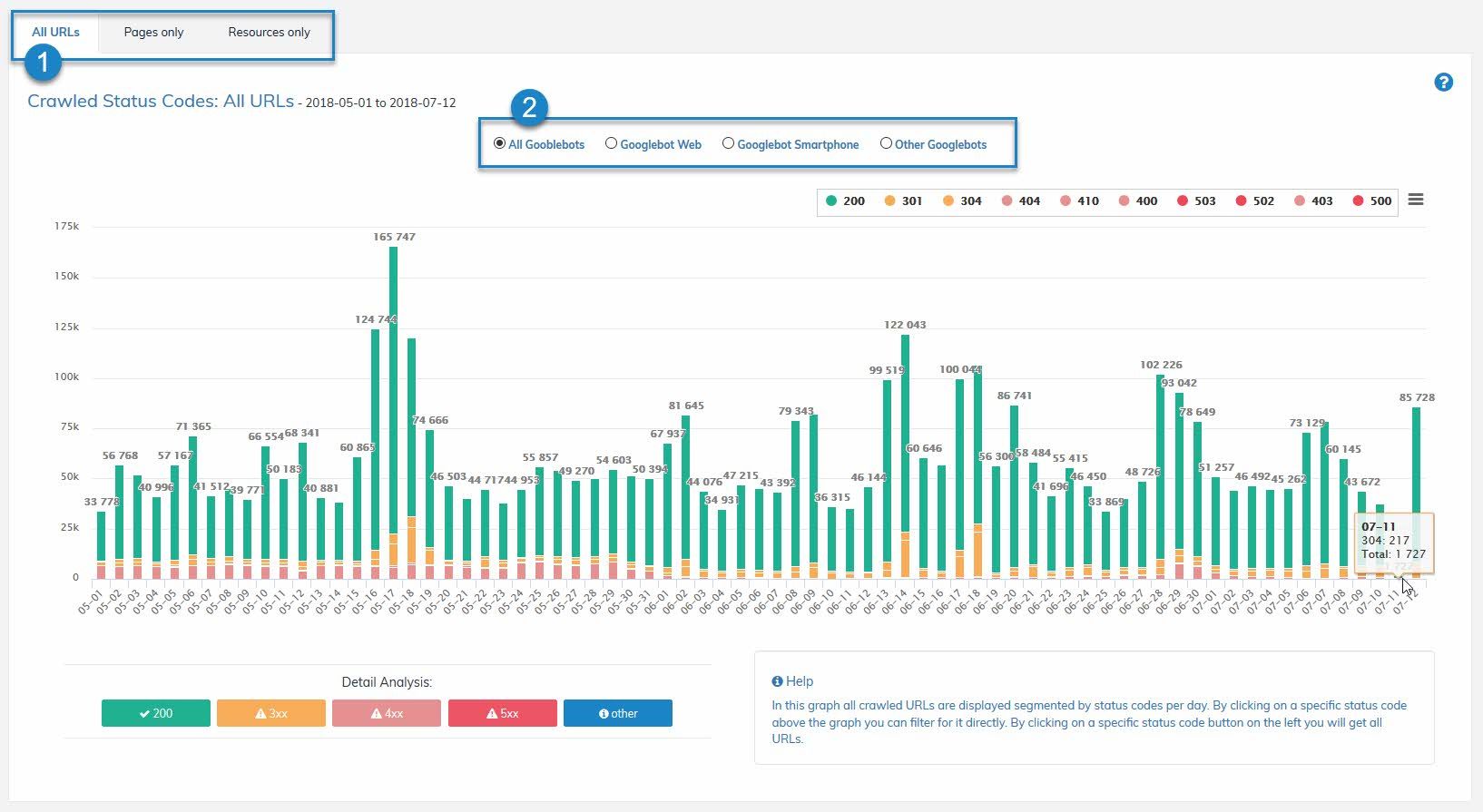

Crawled status codes shows you the number of requests per status code on a daily basis, so that you can identify crawling errors. You can additionally choose the following filter options:

- all URLs together, just pages or just resources

- Googlebot Web (desktop), Googlebot Smartphone or other Googlebots
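The daily status code counts and the bot filter can be sketched as follows. The `(day, status, bot_group)` tuple shape and the group names are assumptions for the example, not the tool's internal data model.

```python
from collections import Counter, defaultdict

def status_codes_per_day(requests, bot_group=None):
    """Return {day: Counter({status: count})}, optionally for one bot group.

    Each request is a (day, status, bot_group) tuple (assumed shape).
    """
    per_day = defaultdict(Counter)
    for day, status, group in requests:
        if bot_group is None or group == bot_group:
            per_day[day][status] += 1
    return dict(per_day)

sample_status = [
    ("2018-05-25", 200, "desktop"),
    ("2018-05-25", 200, "smartphone"),
    ("2018-05-25", 404, "desktop"),
    ("2018-05-26", 301, "smartphone"),
]
print(status_codes_per_day(sample_status))
print(status_codes_per_day(sample_status, bot_group="desktop"))
```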

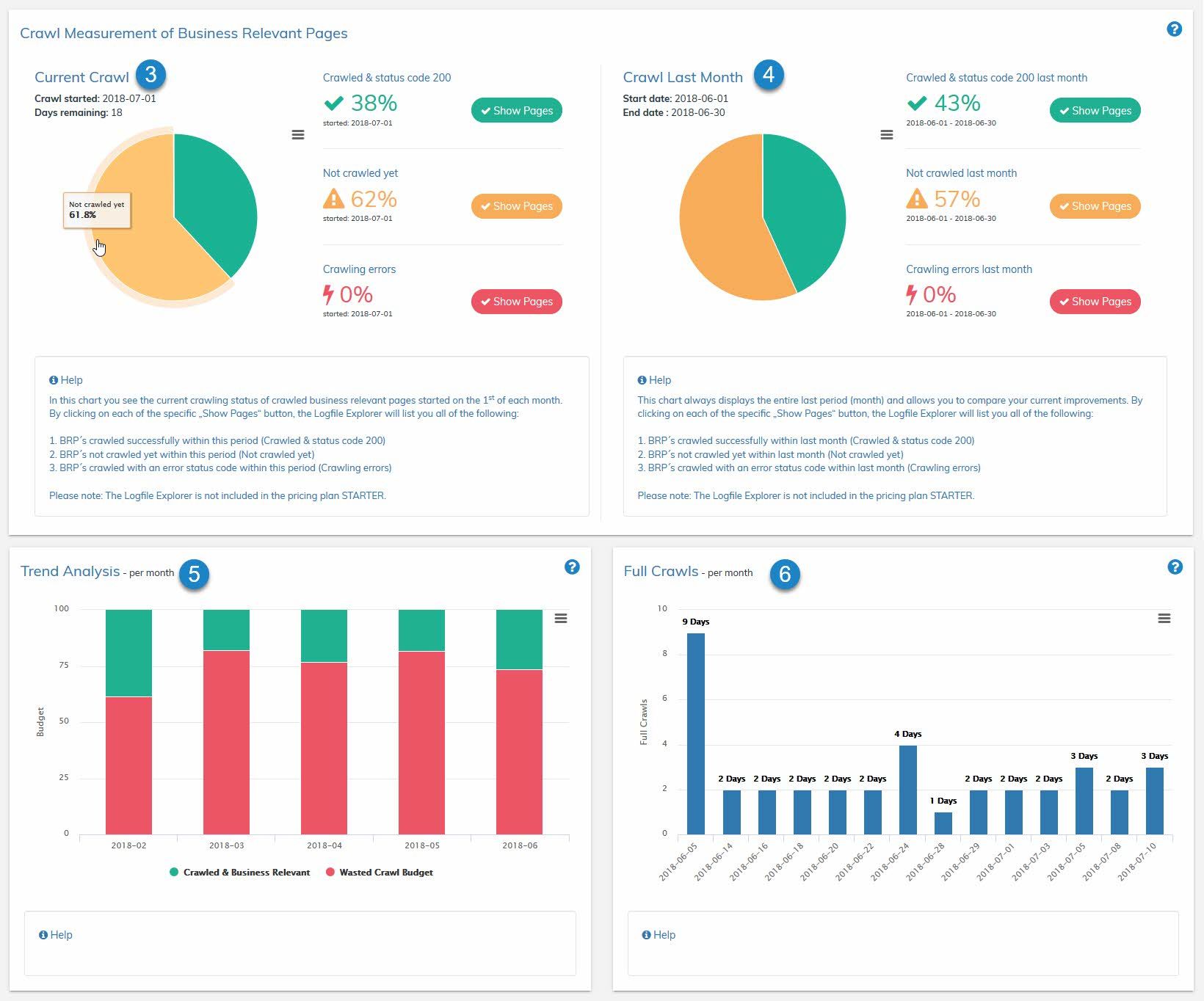

Click on a specific bar within the graph (status code 200, for instance) and you will automatically be taken to our log file explorer (raw data). There you can see all requests prefiltered by status code, Googlebot group and date. See the screenshot below.

3. Business Relevant Pages

What are Business Relevant Pages?

We define Business Relevant Pages as all pages of a website that are important from a business perspective and are meant to fulfill an economic purpose (for instance news articles, category pages, brand pages, product detail pages and many more). To fulfill their economic purpose, these pages need to generate organic search engine traffic from Google search results and, last but not least, satisfy the search intent of searchers as fully as possible. That is why only Business Relevant Pages should be crawled by bots; every crawl of a non-Business Relevant Page wastes already limited and expensive Googlebot requests.

This is how you define your Business Relevant Pages with crawlOPTIMIZER

Note: Defining Business Relevant Pages is a one-time task and should be done at the start.

If your website has one or more XML sitemaps, you can add them to crawlOPTIMIZER via SETTINGS. All URL entries from your sitemaps are then automatically marked as business relevant in the system.

Go to crawlOPTIMIZER’s SETTINGS to add URLs and/or parameters manually in a comments section as Business Relevant Pages. Examples are URLs of resources that Googlebot needs for rendering, or old URLs that have backlinks. Another example is product detail pages with special tracking parameters that are designed for Google Shopping; these URLs are business relevant too, because they are indexed by the general Googlebot. You can also mark URLs as business relevant through REGEX expressions in SETTINGS.
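To give an idea of how sitemap entries and REGEX rules combine, here is a minimal sketch of such a classification. The patterns and paths are hypothetical examples; the exact REGEX syntax that crawlOPTIMIZER accepts in SETTINGS is not shown here.

```python
import re

# Hypothetical patterns; real ones depend on your own URL structure.
BRP_PATTERNS = [
    re.compile(r"^/products/[^/?]+$"),         # product detail pages
    re.compile(r"^/category/"),                # category pages
    re.compile(r"^/assets/.*\.(?:js|css)$"),   # resources needed for rendering
]

def is_business_relevant(path, sitemap_urls=frozenset()):
    """A URL counts as business relevant if it is listed in a sitemap
    or matches one of the manually defined patterns."""
    if path in sitemap_urls:
        return True
    return any(pattern.search(path) for pattern in BRP_PATTERNS)

print(is_business_relevant("/products/red-shoe"))            # matches a pattern
print(is_business_relevant("/search?q=shoe"))                # matches nothing
print(is_business_relevant("/about", sitemap_urls={"/about"}))  # listed in sitemap
```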

Note: Sometimes you want noindex pages to be crawled because they pass on link juice. Please keep these rather rare cases in mind.

Once defined, the analysis starts

- Business Relevant Pages (BRP) (see point 1) shows you yesterday’s crawl, including the percentage of Business Relevant Pages that Googlebot was able to crawl. With a single click on the pie chart you can see the raw data in the log file explorer.

- Trend (see point 2) shows you the development over the last 30 days (default) on a daily basis. At a glance you can check whether your optimization efforts have already produced results or whether there are new crawling issues. If you click on a bar in the graph, you will automatically see pre-filtered raw data in the log file explorer. With one click you can see, for instance, which non-BRP URLs were crawled the day before.

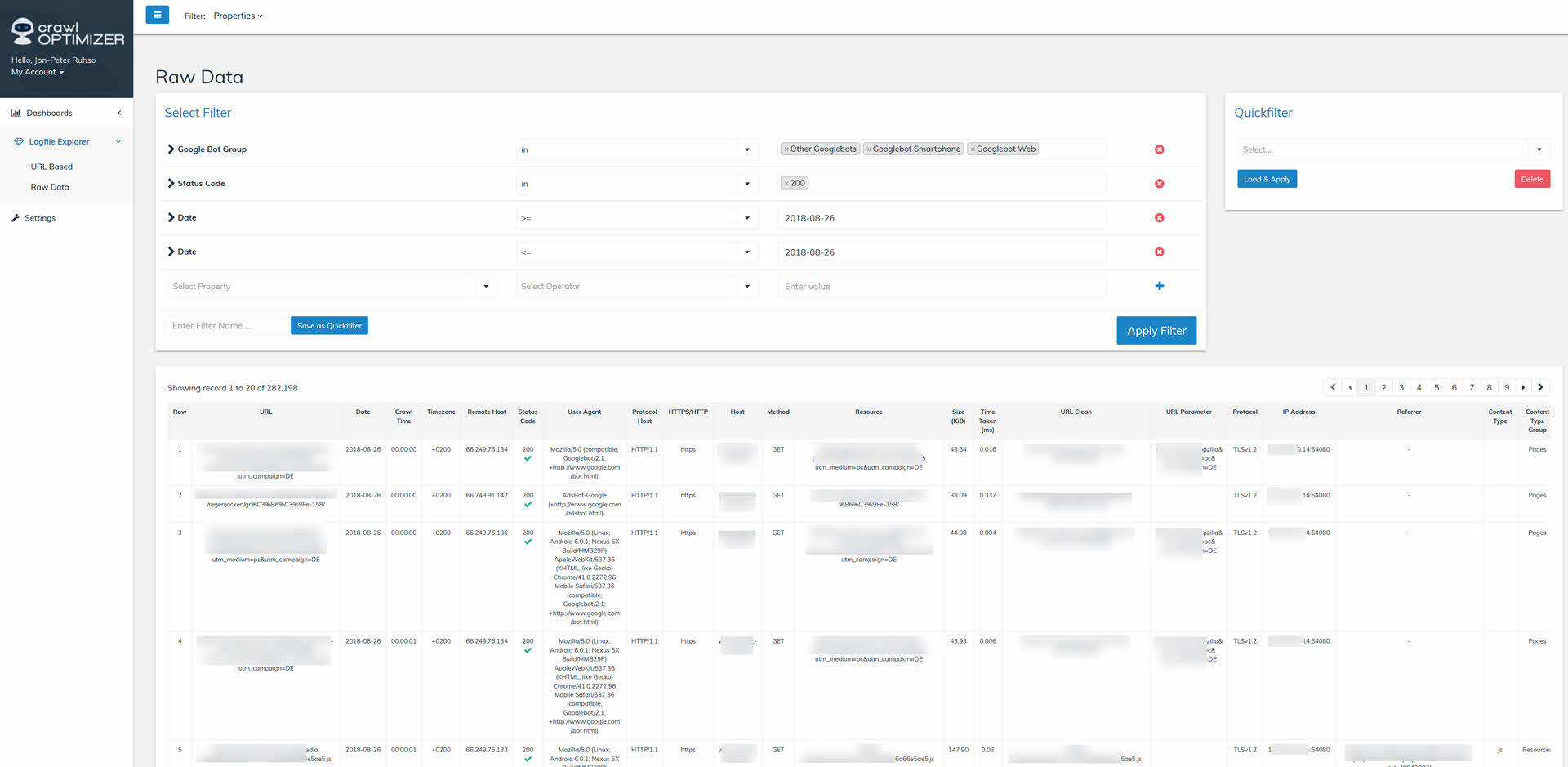

- Current Crawl (see point 3) shows you the percentage of Business Relevant Pages that have been crawled since the first of the month. We always look at the whole month to guarantee comparability. If you want to see all URLs, just click the “Show Pages” button.

- Once a month has passed, you can see the values of the previous month under Crawl Last Month (see point 4). View the list in the Logfile Explorer using the “Show Pages” buttons.

- Trend Analysis (see point 5) gives you a picture of previously collected information on a monthly basis, so you can see long-term developments.

- Full Crawls (see point 6) lets you define the percentage at which a “full crawl” is achieved. 100% is difficult to reach for a big website or online shop because of various page fluctuations. You should therefore choose a value that realistically represents a “full crawl” (for instance 90%). As soon as the value has been entered under SETTINGS, crawlOPTIMIZER starts counting the days until the value (for instance 90%) is reached. Once it has been reached, the number of days it took is saved and shown in the graph. This gives you insight into how long it takes Google to do a full crawl. Your goal should be to reduce the number of days with the help of various optimization measures.
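The full crawl counter can be pictured roughly like this. It is a sketch under stated assumptions: counting starts on the first day found in the data and includes that day, and `crawls` is a list of hypothetical `(date, url)` pairs. How crawlOPTIMIZER counts internally is not documented here.

```python
from datetime import date

def days_until_full_crawl(crawls, business_relevant_urls, threshold=0.9):
    """Return the number of days until `threshold` of the Business Relevant
    Pages have been crawled, or None if the threshold is never reached.

    `crawls` is an iterable of (date, url) pairs (assumed shape).
    """
    targets = set(business_relevant_urls)
    ordered = sorted(crawls)
    if not targets or not ordered:
        return None
    start = ordered[0][0]
    seen = set()
    for day, url in ordered:
        if url in targets:
            seen.add(url)
            if len(seen) / len(targets) >= threshold:
                # Count the first day as day 1 (assumption).
                return (day - start).days + 1
    return None

brp = [f"/p{i}" for i in range(10)]
sample_crawls = (
    [(date(2018, 5, 1), f"/p{i}") for i in range(5)]
    + [(date(2018, 5, 2), f"/p{i}") for i in range(5, 8)]
    + [(date(2018, 5, 4), "/p8")]
)
print(days_until_full_crawl(sample_crawls, brp, threshold=0.9))  # 4
```

In the sample, nine of ten pages (90%) have been crawled by May 4, which is the fourth day of counting. With a threshold of 100% the function returns `None`, because `/p9` is never crawled.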

Note: Always take care of your XML-Sitemap(s) and check them regularly! Your XML-Sitemaps should only list pages that are relevant for search engines and thus business relevant.

Note: Never block URLs that are meant for Google Shopping from being crawled; Google will reject your Google Shopping ads if you do.

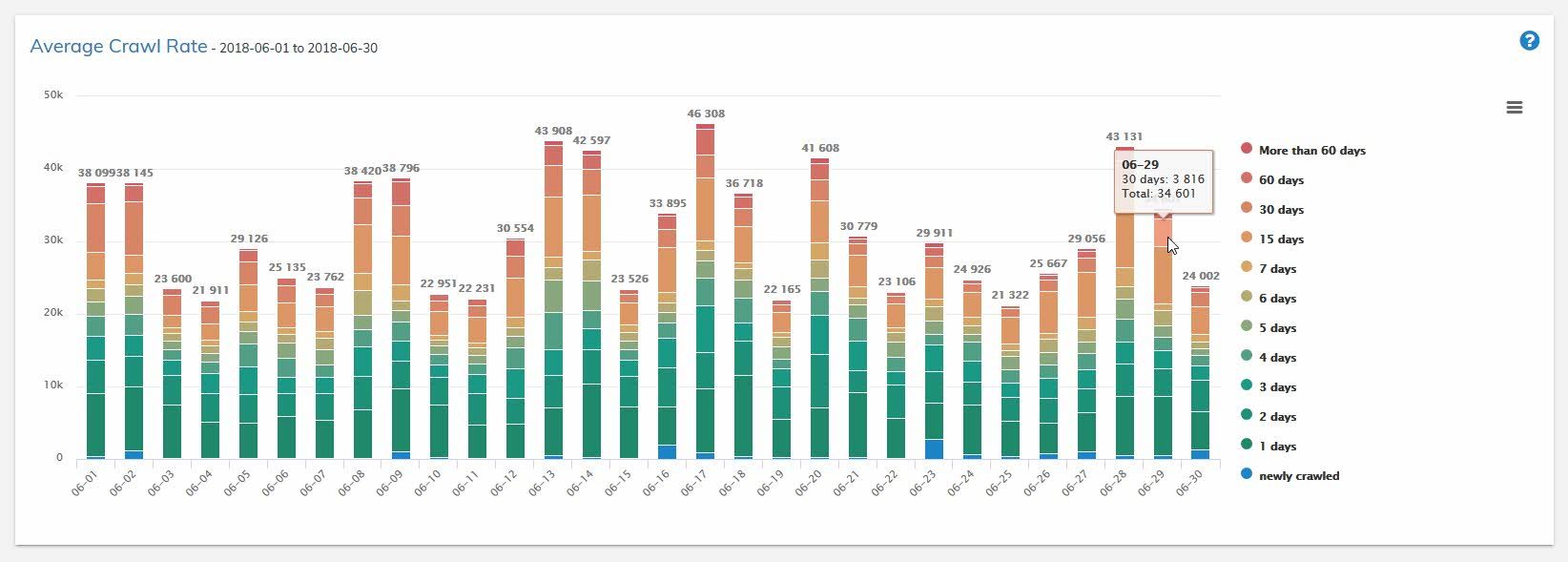

Crawl frequency is defined as the number of days between two crawls of a URL. This graph shows you the crawl frequency on a daily basis. Click on a color in a bar to get all URLs of that cluster pre-filtered automatically in the Logfile Explorer.

Example:

URL “xyz” was first crawled on May 25, 2018, and a second time on June 29, 2018. This equals a crawl frequency of 35 days. You would find this URL in the orange section. See the screenshot above.
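The arithmetic behind this example is a plain date difference. The function name is made up for illustration.

```python
from datetime import date

def crawl_frequency_days(previous_crawl, latest_crawl):
    """Number of days between two consecutive crawls of the same URL."""
    return (latest_crawl - previous_crawl).days

print(crawl_frequency_days(date(2018, 5, 25), date(2018, 6, 29)))  # 35
```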

Our graph shows the crawl frequency of URLs in sensible clusters on a daily basis.

Note: You win if you get your Business Relevant Pages crawled more frequently than your competitors’ pages. In any case you should aim for values under 15 days, and remember: the more frequently your pages are crawled (that is, the fewer days between two crawls), the better!

Our frequency clustering:

- more than 60 days

- 60 days

- 30 days

- 15 days

- 7 days

- 6 days

- 5 days

- 4 days

- 3 days

- 2 days

- 1 day

- crawled for the first time
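One plausible way to read these clusters is as upper bounds: a URL falls into the smallest cluster that is at least as large as its crawl frequency. That reading is an assumption, not something the list states; under it, the 35-day example lands in the “60 days” cluster.

```python
import bisect

# Upper bounds in days, taken from the cluster list; treating them as
# inclusive upper limits is an assumption.
UPPER_BOUNDS = [1, 2, 3, 4, 5, 6, 7, 15, 30, 60]
LABELS = [
    "1 day", "2 days", "3 days", "4 days", "5 days", "6 days", "7 days",
    "15 days", "30 days", "60 days", "more than 60 days",
]

def frequency_cluster(days):
    """Map a crawl frequency in days to a cluster label.

    `None` means the URL has no previous crawl on record.
    """
    if days is None:
        return "first crawl"
    return LABELS[bisect.bisect_left(UPPER_BOUNDS, days)]

for value in (1, 8, 35, 60, 61, None):
    print(value, "->", frequency_cluster(value))
```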

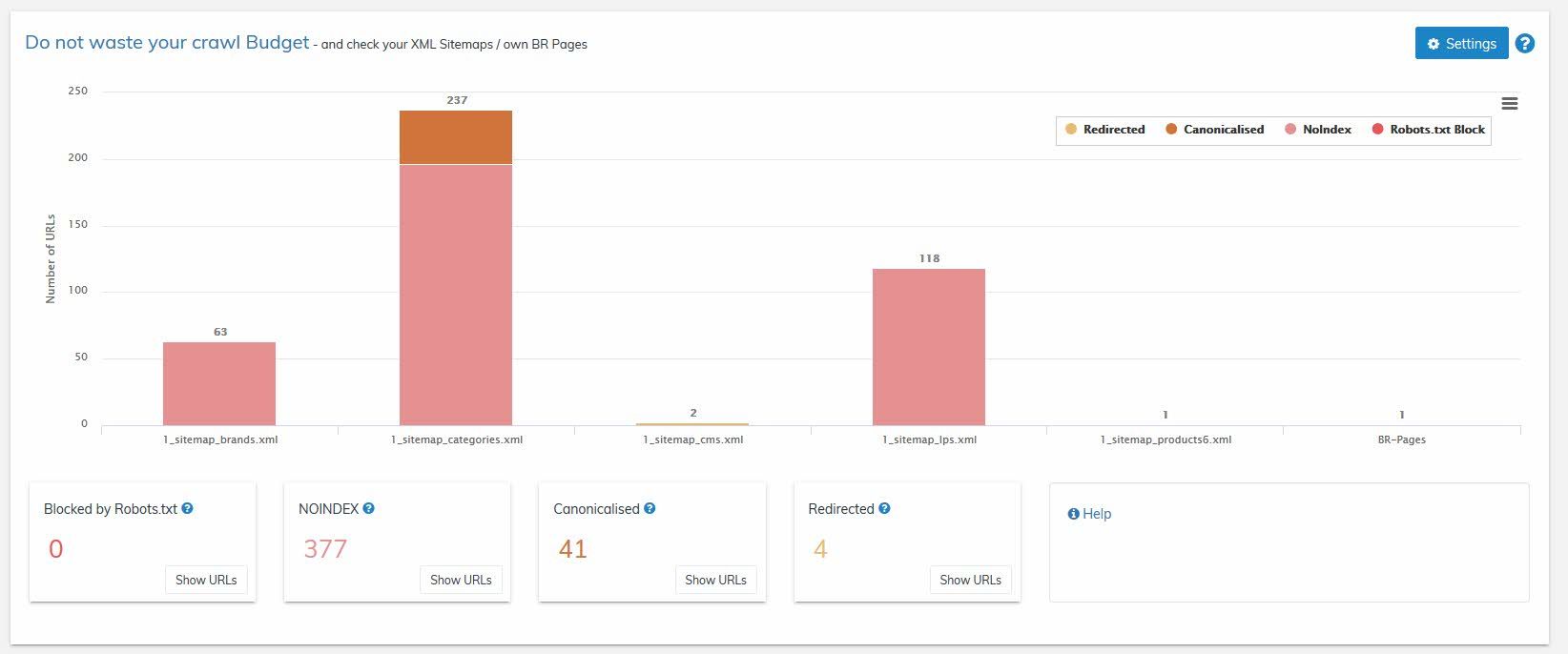

5. Wasting crawl budget

If your website contains XML sitemap(s), you should have added them under SETTINGS by now (see point 3 above).

If necessary, you may also have added Business Relevant Pages manually.

If so, perfect! All of these URLs are crawled by us once a week. Yes, you heard that right: we check them regularly.

Note: Don’t waste your precious Googlebot requests on broken URLs.

For this purpose we developed our own crawler, which crawls your XML sitemap(s) once a week at night. It checks every URL entry in your sitemaps as well as your manually added URLs. We always check the following:

- Are URLs included that are blocked because of your robots.txt?

- Are URLs included that shouldn’t be, for instance 404 URLs or redirecting URLs?

- Are URLs included that are marked as NOINDEX in the meta robots?

- Are URLs included that point to other pages via canonical tags?

If so, please check these pages and correct them or delete them from your sitemaps, because such incorrect URLs waste your precious crawl budget.
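The four checks above can be sketched for a single sitemap entry as follows. This is an illustration only: it assumes the page has already been fetched, so the status code, meta robots value and canonical URL are passed in as arguments, and it uses Python's standard `urllib.robotparser` for the robots.txt rule check. The example URLs and rules are hypothetical.

```python
from urllib.robotparser import RobotFileParser

def sitemap_issues(url, status, meta_robots, canonical, robots):
    """Return the list of problems found for one sitemap URL.

    `robots` is a RobotFileParser that has already parsed robots.txt;
    the other arguments come from a previous fetch of the page.
    """
    issues = []
    if not robots.can_fetch("Googlebot", url):
        issues.append("blocked by robots.txt")
    if status != 200:
        issues.append(f"status {status}")
    if "noindex" in meta_robots.lower():
        issues.append("noindex")
    if canonical and canonical != url:
        issues.append("canonical points elsewhere")
    return issues

robots = RobotFileParser()
robots.parse(["User-agent: *", "Disallow: /cart/"])  # hypothetical rules

print(sitemap_issues(
    "https://example.com/products/shoe", 200, "index,follow",
    "https://example.com/products/shoe", robots))
print(sitemap_issues(
    "https://example.com/cart/view", 301, "noindex",
    "https://example.com/cart", robots))
```

A clean URL yields an empty list; anything else should be corrected or removed from the sitemap.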

You can view the incorrect URLs at any time by clicking one of the “Show URLs” buttons.

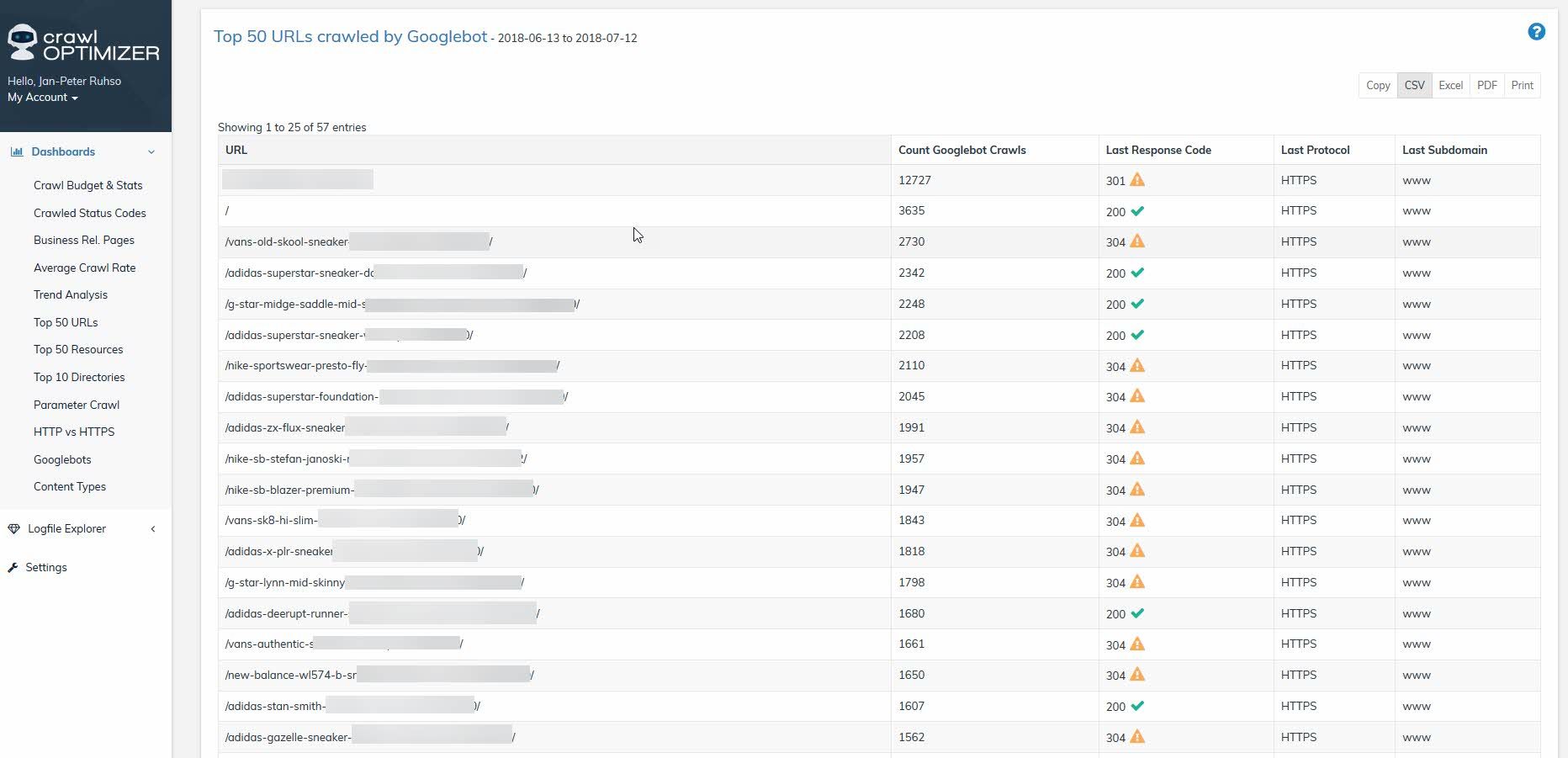

6. Top 50 URLs

This overview shows your 50 most-crawled URLs in a given period at a glance. The status code of the most recent crawl is shown as well.

By clicking on any URL you will be taken to the Logfile Explorer (RAW DATA), where all individual requests for that URL are listed.
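A ranking of this kind can be sketched with a counter plus a record of the last status code seen per URL. The `(url, status)` tuples in chronological order are an assumed input shape.

```python
from collections import Counter

def top_urls(requests, limit=50):
    """Return (url, request_count, last_status) for the most-crawled URLs.

    `requests` is an iterable of (url, status) tuples in chronological order.
    """
    counts = Counter()
    last_status = {}
    for url, status in requests:
        counts[url] += 1
        last_status[url] = status  # later requests overwrite earlier ones
    return [(url, n, last_status[url]) for url, n in counts.most_common(limit)]

sample_hits = [
    ("/a", 200), ("/b", 200), ("/a", 301),
    ("/c", 404), ("/a", 200), ("/b", 404),
]
print(top_urls(sample_hits, limit=2))
```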

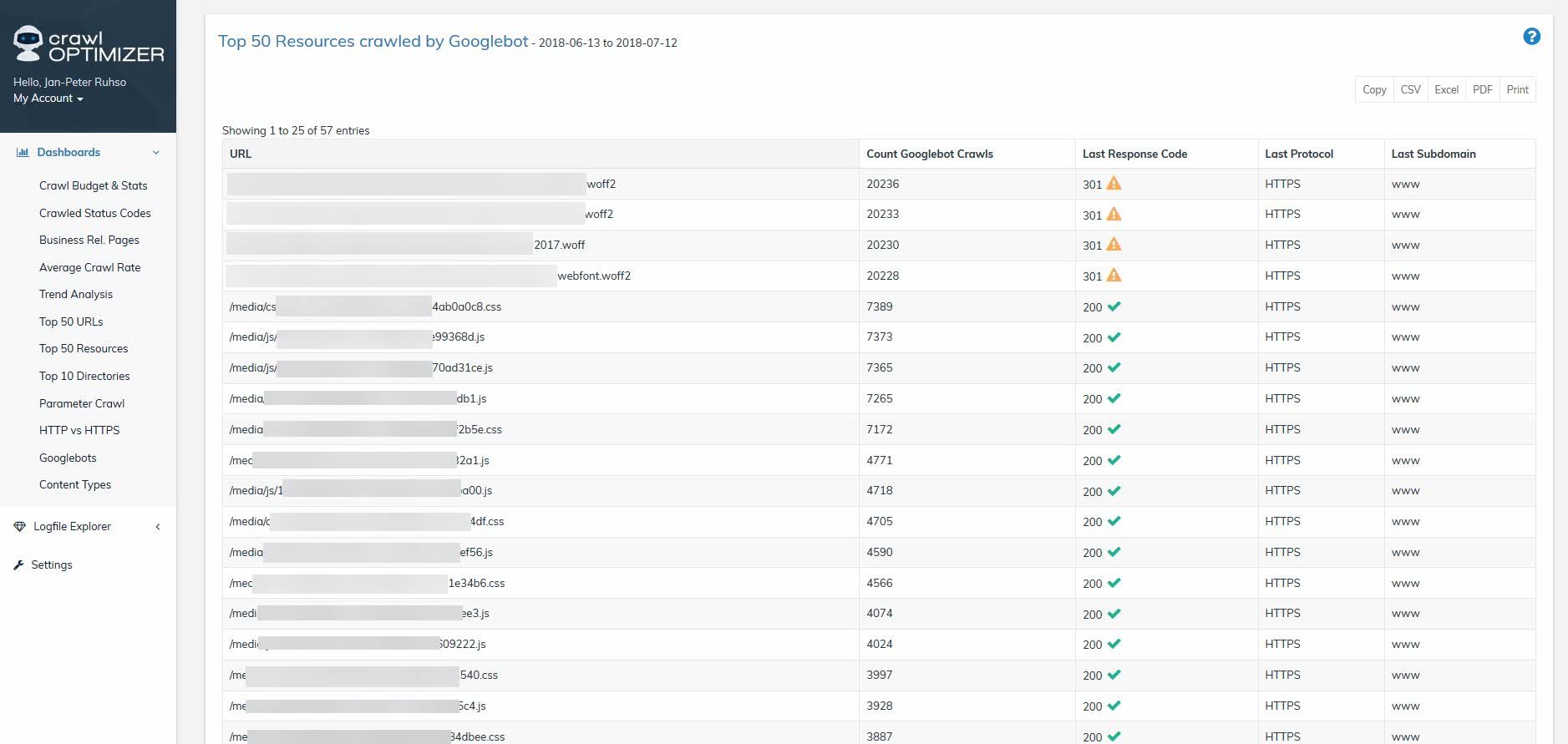

7. Top 50 resources

In contrast to the previous overview, this one does not list crawled URLs (pages) but the most-crawled resources (JS, CSS, etc.), again with the most recent status code.

By clicking on any URL you will likewise be taken to the Logfile Explorer (RAW DATA), where all individual requests for this URL (resource) are listed.

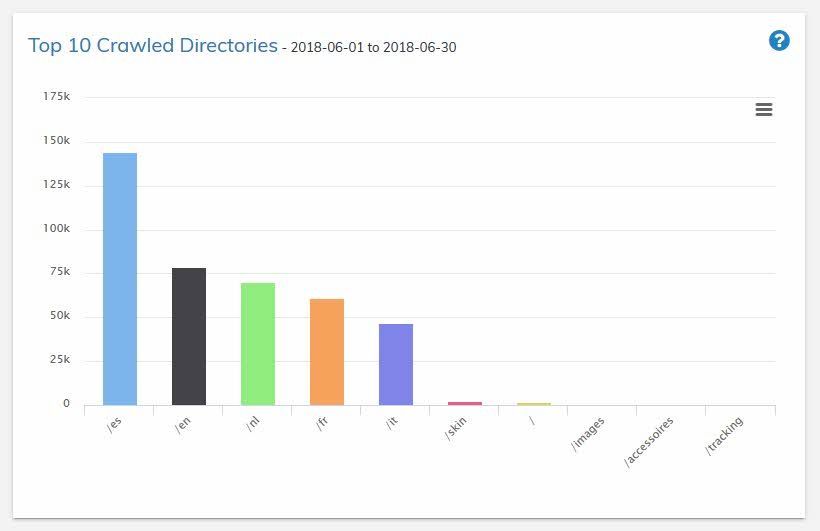

8. Top 10 directories

Which directories are the most crawled? A question that often comes up but is rarely answered.

Our simple graph answers this question at a glance, so you can easily derive on-page optimization measures.

You can view all requests for a specific directory in the Logfile Explorer at any time. Just click on one of the bars in the graph.
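For illustration, a directory ranking can be computed by grouping crawled URLs by their first path segment. Treating only the first segment as “the directory” is an assumption; the tool may group differently.

```python
from collections import Counter
from urllib.parse import urlsplit

def top_directories(urls, limit=10):
    """Count requests per top-level directory (first path segment)."""
    counts = Counter()
    for url in urls:
        parts = urlsplit(url).path.split("/")  # "/shop/x" -> ["", "shop", "x"]
        # Files directly under the root are counted under "/".
        directory = f"/{parts[1]}/" if len(parts) > 2 else "/"
        counts[directory] += 1
    return counts.most_common(limit)

sample_urls = [
    "/shop/shoes/red",
    "/shop/",
    "/blog/post-1",
    "/about",
    "https://example.com/shop/hats?color=blue",
]
print(top_directories(sample_urls))
```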

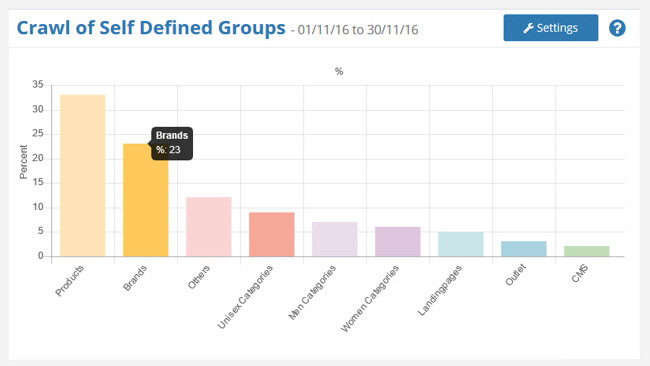

9. Self defined groups

Through a simple REGEX expression (in SETTINGS) you can define your own URL groups and monitor the crawl frequency of these groups regularly. If you notice that important URL groups are not being crawled enough, you should review your website architecture first from a user’s point of view and then from an SEO one. Afterwards, optimize gradually and measure your improvement regularly using crawlOPTIMIZER.
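Conceptually, a self-defined group is a name plus a regular expression that is matched against each crawled URL. The group names and patterns below are hypothetical, and the sketch counts requests per group rather than reproducing the tool's own graph.

```python
import re
from collections import Counter

# Hypothetical groups; define patterns that fit your own URL structure.
GROUPS = {
    "product pages": re.compile(r"^/products/"),
    "blog": re.compile(r"^/blog/"),
}

def requests_per_group(paths, groups=GROUPS):
    """Count how many crawled paths fall into each self-defined group."""
    counts = Counter({name: 0 for name in groups})
    for path in paths:
        for name, pattern in groups.items():
            if pattern.search(path):
                counts[name] += 1
    return dict(counts)

sample_paths = ["/products/red-shoe", "/products/blue-hat", "/blog/news", "/about"]
print(requests_per_group(sample_paths))
```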

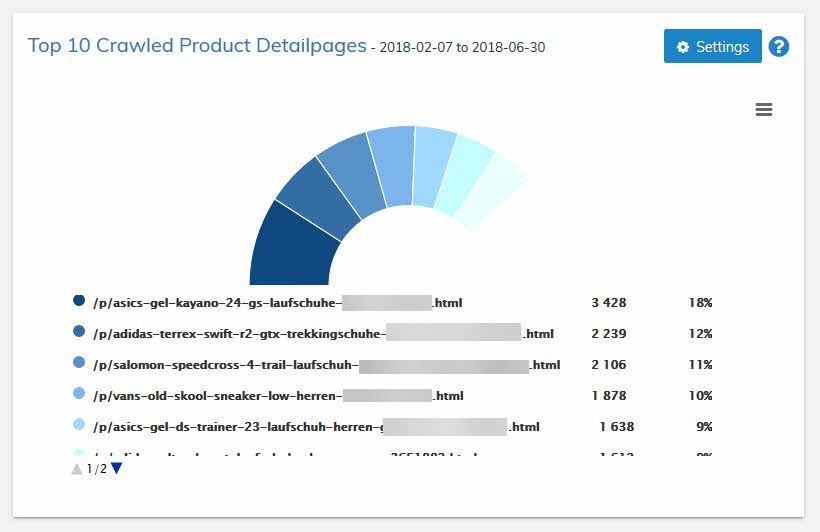

10. Top 10 products

Do you run a web shop? Then this feature is meant for you. By submitting an identifier once in SETTINGS, you can define your products and check which products are crawled the most by Googlebot. This information helps you with further analyses and on-site optimizations.

Note: If you don’t run a web shop, you can enter any identifier in SETTINGS and monitor it in the same way.

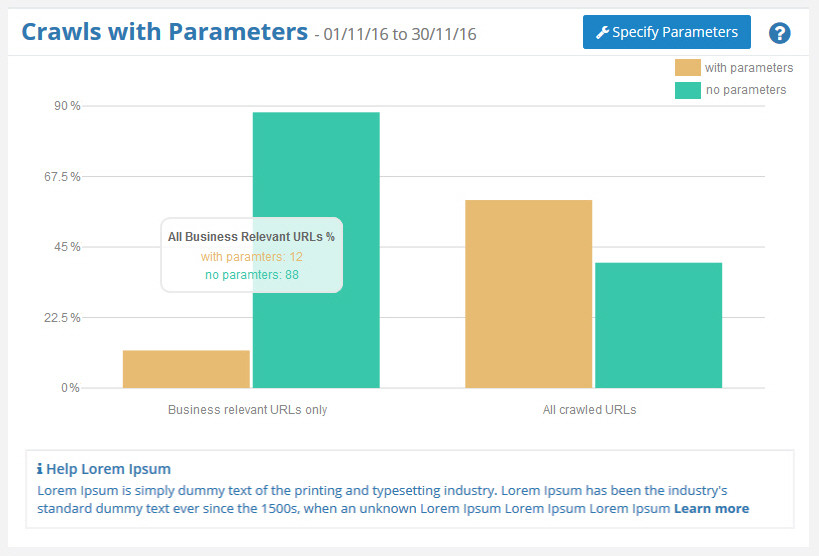

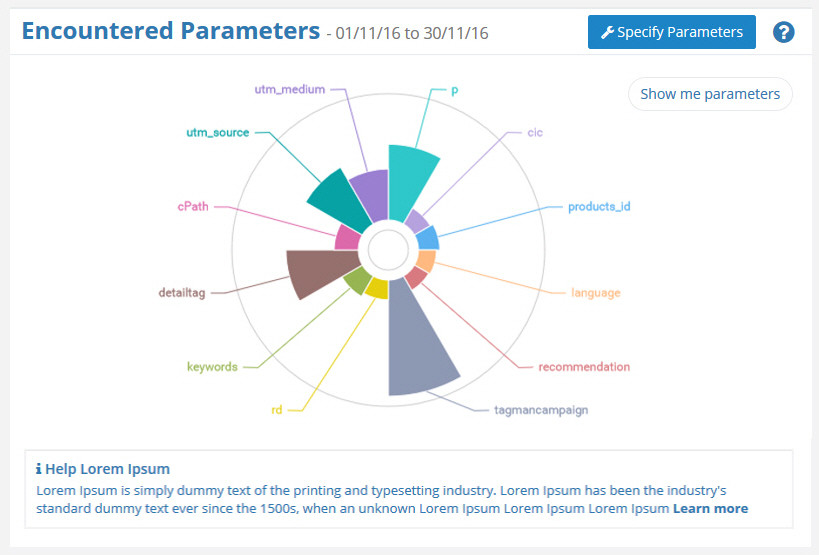

11. Crawled parameters

“Business Relevant URLs” vs. “All URLs”

This graph shows requests split in two ways: once for ‘Business Relevant URLs’ and once for ‘All URLs’. The advantage is that you can see the percentage of Business Relevant URLs that were crawled with parameters in a given period. This information helps you monitor crawling deliberately.

Note: As with the other graphs, clicking on any bar takes you to the pre-filtered selection in the Logfile Explorer (RAW DATA).

Crawled parameters

This graph shows all parameters that were crawled in a defined period of time.

Which parameters significantly influence the content of your website, and which can be excluded from crawling? Analyze the parameters of greatest concern in detail, and steer the bot with a clean website architecture and/or, cautiously, by using robots.txt.
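To see which parameters Googlebot requests most often, the query strings of crawled URLs can be split into parameter names and counted. A minimal sketch using Python's standard `urllib.parse`; the sample URLs are hypothetical.

```python
from collections import Counter
from urllib.parse import parse_qsl, urlsplit

def crawled_parameters(urls):
    """Count in how many crawled URLs each query parameter name appears."""
    counts = Counter()
    for url in urls:
        query = urlsplit(url).query
        # A set avoids counting a name twice when it repeats within one URL.
        names = {name for name, _ in parse_qsl(query, keep_blank_values=True)}
        counts.update(names)
    return counts

sample_param_urls = [
    "/shoes?color=red&size=42",
    "/shoes?color=blue",
    "/hats?utm_source=feed",
    "/about",
]
print(crawled_parameters(sample_param_urls).most_common())
```

Parameters that appear often but do not change the page content are the first candidates for a closer look.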

Note: If you are actively using Google Shopping and your URLs include various (tracking) parameters, you must never block these parameters from being crawled via robots.txt! Blocking them would result in your Google Shopping ads being rejected. The normal (search) Googlebot crawls your shopping feed.

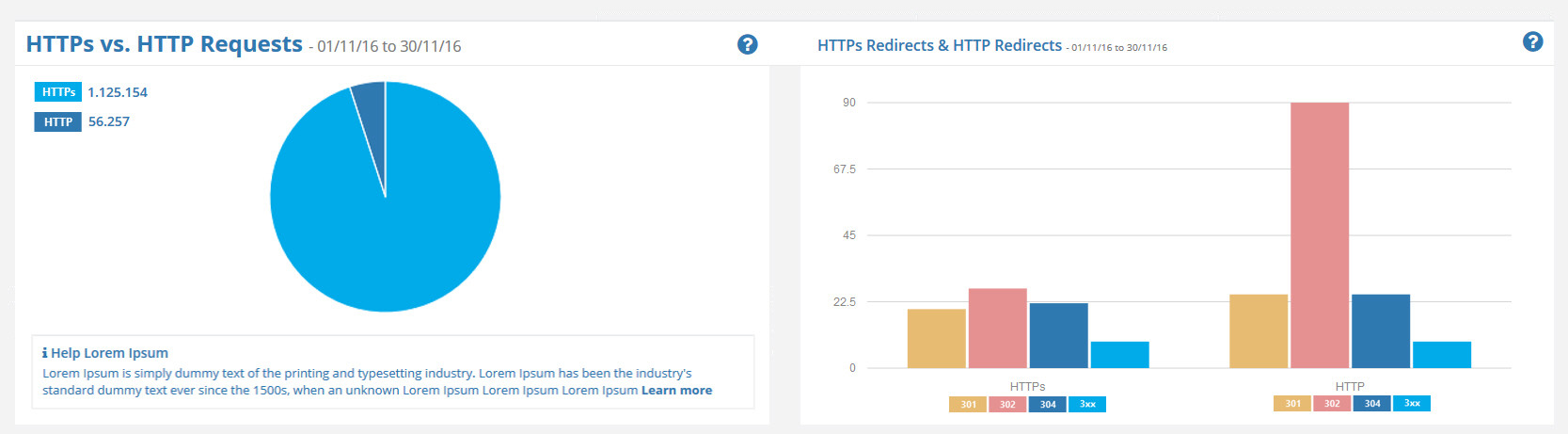

12. HTTP vs. HTTPS crawls

Both graphs show you how frequently your pages have been crawled under HTTPS or HTTP. This information is useful if an HTTPS migration is upcoming, if an HTTPS migration hasn’t been done correctly, or simply as a check afterwards. You can also see which redirect type was used. If you click on a bar in the graph, you can display all filtered requests in the Logfile Explorer at any time.

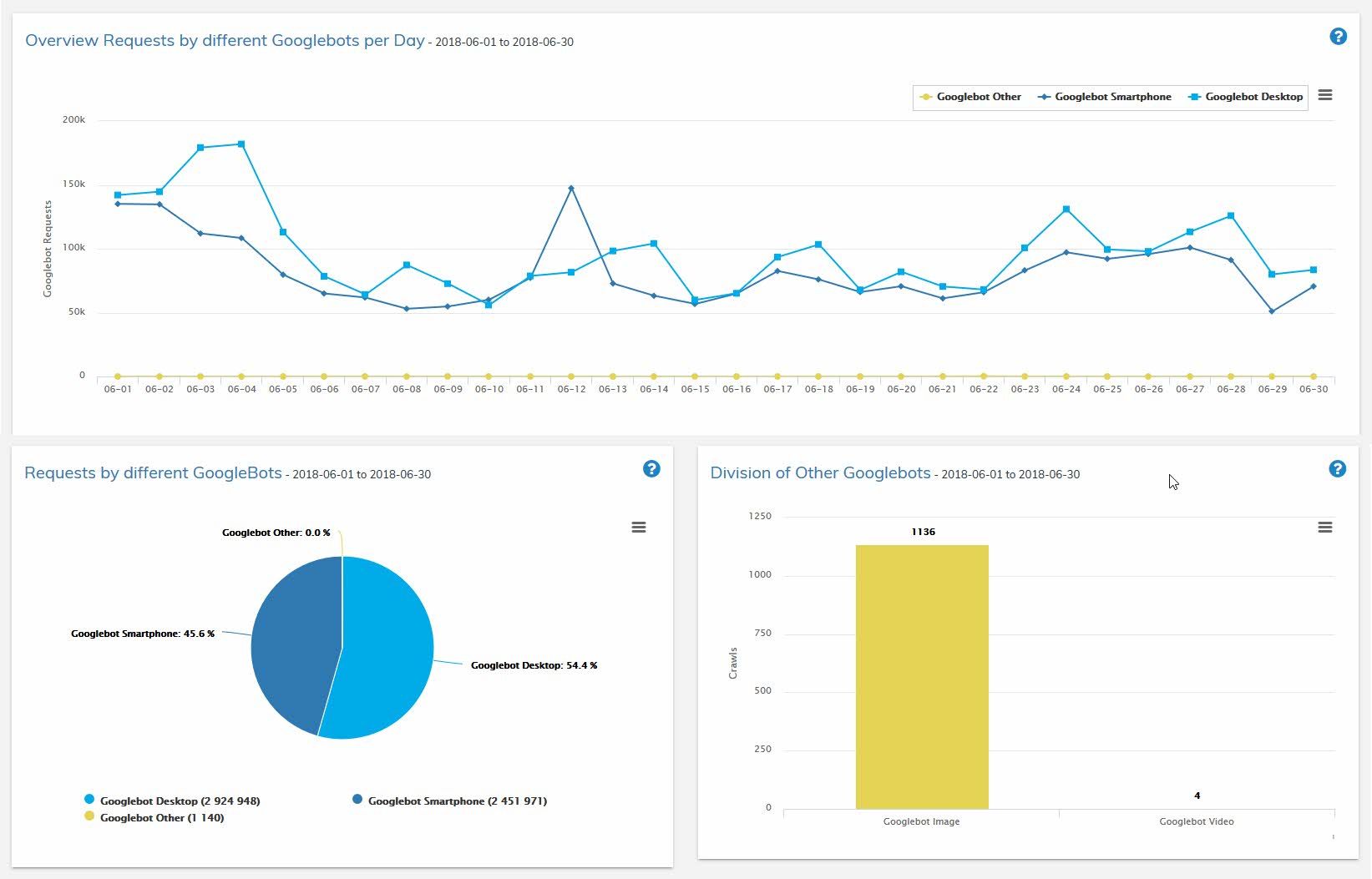

13. Googlebot types

There are many types of Googlebot. Here you can see all Googlebots that have crawled your website and how often. We have clustered the different Googlebots sensibly for you: Googlebot Desktop, Googlebot Smartphone and other Googlebots.
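A clustering into these three groups can be approximated from the user agent string alone. The rules below are a simplification and an assumption about how such a grouping might work; user agents can be spoofed, so a production setup would additionally verify that the request really came from Google.

```python
def googlebot_group(user_agent):
    """Assign a user agent to one of three Googlebot groups, or None."""
    if "Googlebot" not in user_agent:
        return None
    if "Googlebot/2.1" in user_agent:
        # The smartphone crawler announces a mobile browser in its user agent.
        return "Googlebot Smartphone" if "Mobile" in user_agent else "Googlebot Desktop"
    return "Googlebot other"  # e.g. Googlebot-Image

desktop_ua = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
smartphone_ua = (
    "Mozilla/5.0 (Linux; Android 6.0.1; Nexus 5X Build/MMB29P) "
    "AppleWebKit/537.36 (KHTML, like Gecko) Chrome/41.0.2272.96 Mobile "
    "Safari/537.36 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
)
for ua in (desktop_ua, smartphone_ua, "Googlebot-Image/1.0", "curl/7.58.0"):
    print(googlebot_group(ua))
```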

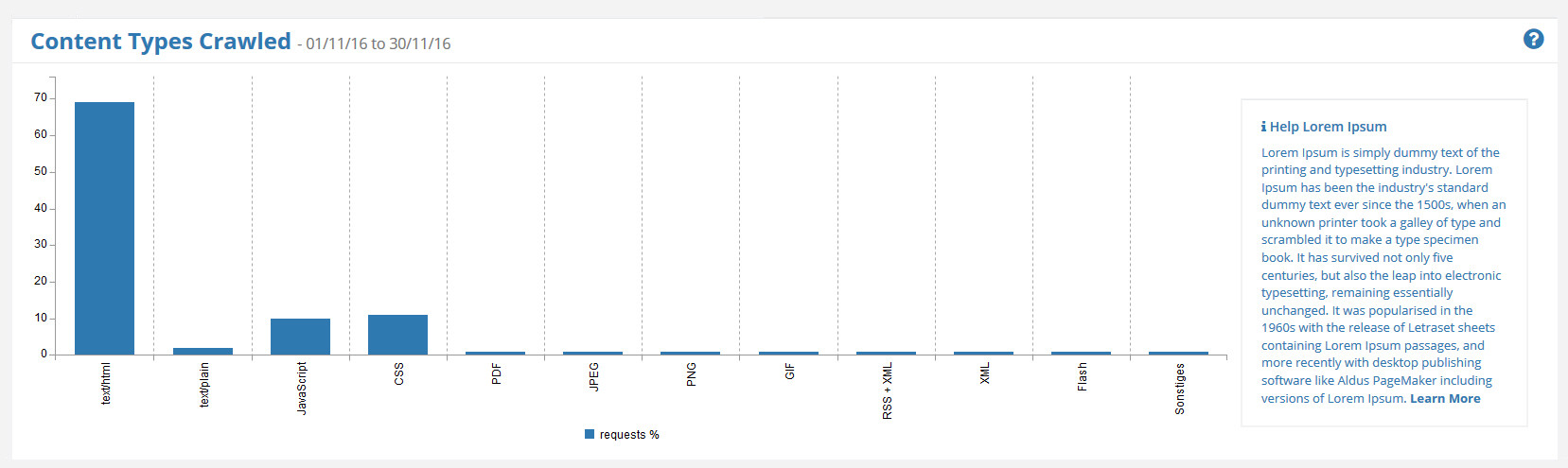

14. Content types

This overview shows the crawled content types, ordered by frequency.
