John Müller

Webmaster Trends Analyst at Google

“Log files are so underrated, so much good information in them.” (Twitter)

crawlOPTIMIZER is a new SEO software tool that helps you optimize your crawl budget, so that you can guide Googlebot through your website as precisely and efficiently as possible.

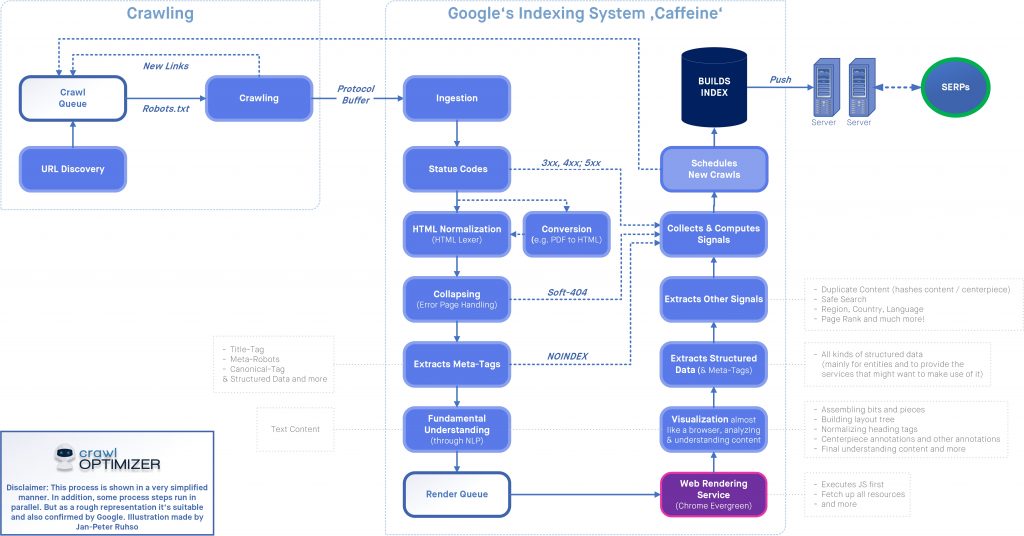

(1/2) @g33konaut @methode @JohnMu The past few weeks I've been my own crawler & indexer, collecting data from all sorts of specific resources over the internet & podcasts to put together a big picture of the Google indexing process. It's of course incomplete & greatly simplified! pic.twitter.com/5NmJI26pNW

— Jan-Peter Ruhso (@JanRuhso) October 29, 2021

Do you know which content on your website is being crawled by Googlebot? No? Then you should take a closer look at this tool. Check it out!

Based on predefined analyses & evaluations, crawlOPTIMIZER shows you untapped SEO potential for maximizing crawl efficiency. This helps you get new and updated content into the Google index as quickly as possible and earn more unpaid search engine traffic.

Predefined analyses & evaluations help even SEO novices to easily discover crawling difficulties.

Only Googlebot log entries are analyzed & stored, never user-related data! All GDPR requirements are met.

Bastian Grimm, CEO & Director Organic Search at Peak Ace AG

Stephan Czysch, Author of several SEO textbooks & lecturer for SEO

crawlOPTIMIZER is for every professional who is responsible for a company's organic search engine traffic and who might not have endless time for detailed log file analyses.

• Head of SEO

• SEO Managers

• Head of Online Marketing

• Online Marketing Managers

• Head of E-Commerce

• E-Commerce Managers

• Webmasters / IT Admins

• SEO agencies

crawlOPTIMIZER was specifically developed for websites that contain a few thousand pages or for online ventures that are interested in increasing their unpaid search engine traffic.

• Web shops / online shops

• E-Commerce websites

• Informational websites

• News / weather portals

• Big blogs / forums

Without crawling there is no indexing, and thus no Google search ranking.

Your website can only be found via Google Search if it has been added to the Google index. For this reason, Googlebot (also called a spider) continuously crawls publicly accessible websites around the world in search of new and updated content.

By using various on-site optimization measures, you can influence the crawling process and help determine which content is added to the Google index and which content is crawled more frequently.

Only web server log files show which content Googlebot crawls and how often. For this reason, continuous crawl monitoring should be standard for every online venture. crawlOPTIMIZER was developed for this purpose, true to the mantra: ‘Keep it as simple as possible.’
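To make the idea concrete, here is a minimal sketch of how Googlebot requests could be counted from a web server access log. It is not crawlOPTIMIZER's implementation. It assumes the common "combined" log format used by Apache and nginx, and the names `LOG_PATTERN`, `googlebot_hits` and `SAMPLE_LOG` are hypothetical. It also matches on the user agent string only, which can be spoofed, so a production setup would additionally verify the requesting host.

```python
import re
from collections import Counter

# Assumed "combined" log format:
# host ident user [time] "METHOD path PROTOCOL" status size "referer" "user-agent"
LOG_PATTERN = re.compile(
    r'^(?P<host>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"$'
)


def googlebot_hits(lines):
    """Count requests per path for log lines whose user agent mentions Googlebot."""
    hits = Counter()
    for line in lines:
        match = LOG_PATTERN.match(line.strip())
        # Lines that do not fit the assumed format are skipped silently.
        if match and "Googlebot" in match.group("agent"):
            hits[match.group("path")] += 1
    return hits


# Hypothetical sample data for illustration only.
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
SAMPLE_LOG = [
    f'66.249.66.1 - - [29/Oct/2021:10:00:00 +0000] "GET /products/shoes HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [29/Oct/2021:10:05:00 +0000] "GET /products/shoes HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [29/Oct/2021:10:06:00 +0000] "GET /old-page HTTP/1.1" 404 312 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.7 - - [29/Oct/2021:10:07:00 +0000] "GET /products/shoes HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (Windows NT 10.0)"',
]

hits = googlebot_hits(SAMPLE_LOG)
for path, count in hits.most_common():
    print(path, count)
```

The last sample line comes from a regular browser and is ignored, which mirrors the idea of looking only at Googlebot traffic rather than user data.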

If you want to learn more about this topic, please visit the page Google’s Indexing Process.

‘Googlebot is Google’s web crawling bot (sometimes also called a ‘spider’). Crawling is the process by which Googlebot discovers new and updated pages to be added to the Google index.’ Source: Google Search Console

• Google runs 15 data processing centers worldwide

• Operating costs are more than $10 billion per year

• Google spends a lot of money on crawling websites

• Your server resources are also heavily strained by needless crawl volume

Examples:

• What does Google use its resources for and where are they wasted?

• Is my content being crawled?

• Which content is not being crawled?

• Does Googlebot have problems while crawling my website?

• Where is potential for optimization?

• and many more…

✔ Only SEO landing pages should be crawled – no waste of Google crawls

✔ Shift of originally wasted Google crawls to business relevant SEO landing pages

✔ An almost complete crawl of the entire website (SEO-relevant pages) in the shortest possible time

✔ Detect errors on your own website or infrastructure quickly and eliminate them immediately (for example, 4xx or 5xx errors)

✔ Gentle and efficient use of resources (server infrastructure, traffic, etc.), both internally and with Google

✔ Strengthening the brand

✔ Outcome: increase of your visibility & traffic

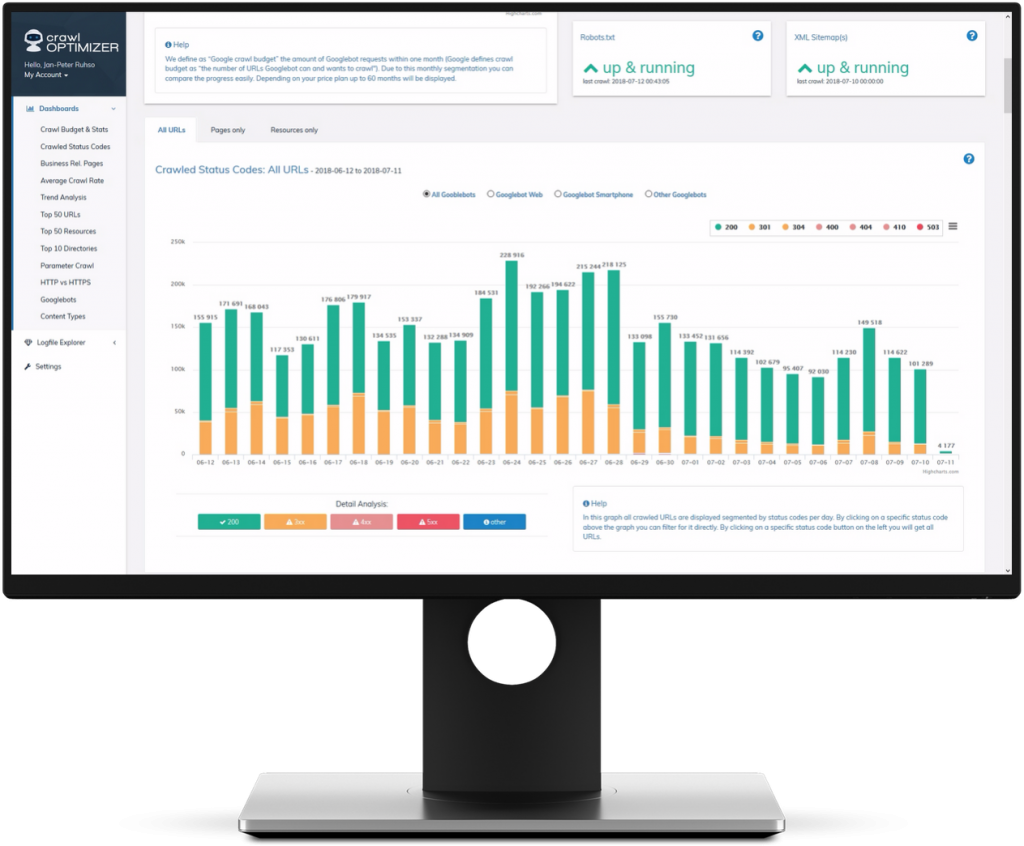

Find out which pages Google crawls. Goal: decide on measures that improve the crawling process accordingly.

The dashboard is the core of the tool, with ready-to-use, relevant evaluations. Using it will save you a lot of precious time and money.

Finally, retroactive log file analyses can be done without any effort, thanks to log file storage in a safe cloud. Easy access 24/7!

All analyses & evaluations are prepared in an easy and understandable way, even if you don’t have an SEO background.

We have developed many options for accessing your log files, so you can choose the one that is easiest for you. The one-time setup is extremely user-friendly.

If you have any questions, we are always there to help. We’d love to assist you in your initial setup and are more than happy to reach out to your IT or hosting providers.

• Crawl budget statistics & waste of your crawl budget

• Status codes & crawling statistics

• Crawling of business relevant pages

• Top 50 of crawled URLs & resources

• Top 10 of crawled sitemaps & products

• Crawled types of content, parameters & HTTP(S)

• Different Googlebots

• And many more
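As a rough illustration of what such evaluations involve, the sketch below summarizes a list of Googlebot requests into status code classes, the most crawled URLs, and the share of crawls spent on parameterized URLs. It is an assumption-laden example, not the tool's own logic: `crawl_summary`, `SAMPLE_REQUESTS` and the use of "URLs with query parameters" as a proxy for potentially wasted crawls are all hypothetical.

```python
from collections import Counter
from urllib.parse import urlsplit


def crawl_summary(requests, top_n=3):
    """Summarize (path, status) pairs taken from Googlebot requests."""
    total = len(requests)
    # Group status codes into classes such as 2xx, 4xx, 5xx.
    status_classes = Counter(f"{status // 100}xx" for _, status in requests)
    # Most frequently crawled URLs.
    top_urls = Counter(path for path, _ in requests).most_common(top_n)
    # Assumption: URLs with a query string are candidates for wasted crawls.
    with_params = sum(1 for path, _ in requests if urlsplit(path).query)
    return {
        "total": total,
        "status_classes": dict(status_classes),
        "top_urls": top_urls,
        "parameter_share": with_params / total if total else 0.0,
    }


# Hypothetical sample data for illustration only.
SAMPLE_REQUESTS = [
    ("/products/shoes", 200),
    ("/products/shoes", 200),
    ("/products/shoes?sort=price", 200),
    ("/old-page", 404),
    ("/checkout", 500),
]

summary = crawl_summary(SAMPLE_REQUESTS)
print(summary)
```

Which URLs actually count as wasted crawl budget depends on the site, so the parameter check here should be read as one possible heuristic only.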

Your log files are automatically stored and archived on our high-performance servers. This gives you easy access from anywhere, at any time. Another benefit worth mentioning is that long-term & retroactive analyses are no longer a problem.

Our log file explorer offers you various filter options to tailor your analyses & evaluations. Just a few clicks are needed to find the data & information you need, which can then be exported as an XLSX or CSV file.

You can connect your project(s) to Google Search Console in just one click. This enriches your log data with search data (Clicks, Impressions, CTR, etc.). Yeah!

You can connect your project(s) to the Google Indexing API and push URLs to, or delete them from, the Google index in seconds.
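For readers curious what such a notification looks like, here is a minimal sketch that builds (but does not send) a request to the Google Indexing API's `urlNotifications:publish` endpoint with the notification types `URL_UPDATED` and `URL_DELETED`. How crawlOPTIMIZER does this internally is not described here. The helper `build_notification`, the placeholder access token and the example URL are assumptions, and a real call requires OAuth 2.0 credentials authorized for the Indexing API.

```python
import json
import urllib.request

INDEXING_ENDPOINT = "https://indexing.googleapis.com/v3/urlNotifications:publish"


def build_notification(url, deleted=False, access_token="YOUR_ACCESS_TOKEN"):
    """Build a POST request that notifies Google about an updated or deleted URL."""
    body = {"url": url, "type": "URL_DELETED" if deleted else "URL_UPDATED"}
    return urllib.request.Request(
        INDEXING_ENDPOINT,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Placeholder token: obtain a real one via OAuth 2.0 before sending.
            "Authorization": f"Bearer {access_token}",
        },
        method="POST",
    )


# Hypothetical example URL; the request is only constructed, never sent.
request = build_notification("https://www.example.com/products/shoes")
print(request.get_method(), request.full_url)
print(request.data.decode("utf-8"))
```

Sending the request would be a matter of passing it to `urllib.request.urlopen` once a valid token is in place.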

€ 199

€ 269

€ 339

€ 459

€ 649

After the conclusion of your contract and once we have set up your personal account, you can try crawlOPTIMIZER free for 7 days. During this trial period, we will import and analyze 35% of your log files. We will inform you once your trial period starts. Within these 7 days you can cancel the contract at any time, with no commitments. How to cancel? A short e-mail to info@crawloptimizer.com with the reason for your cancellation is sufficient. You won’t be charged for these 7 days of testing. Your contract will be terminated as soon as you cancel your trial version. If our tool’s features convince you and you do not cancel within these 7 days, the previously concluded contract becomes binding from the 8th day, and we will start importing 100% of your logs. Let’s rock!

€ 79

save now € 20

individual pricing